Our Research Contributions

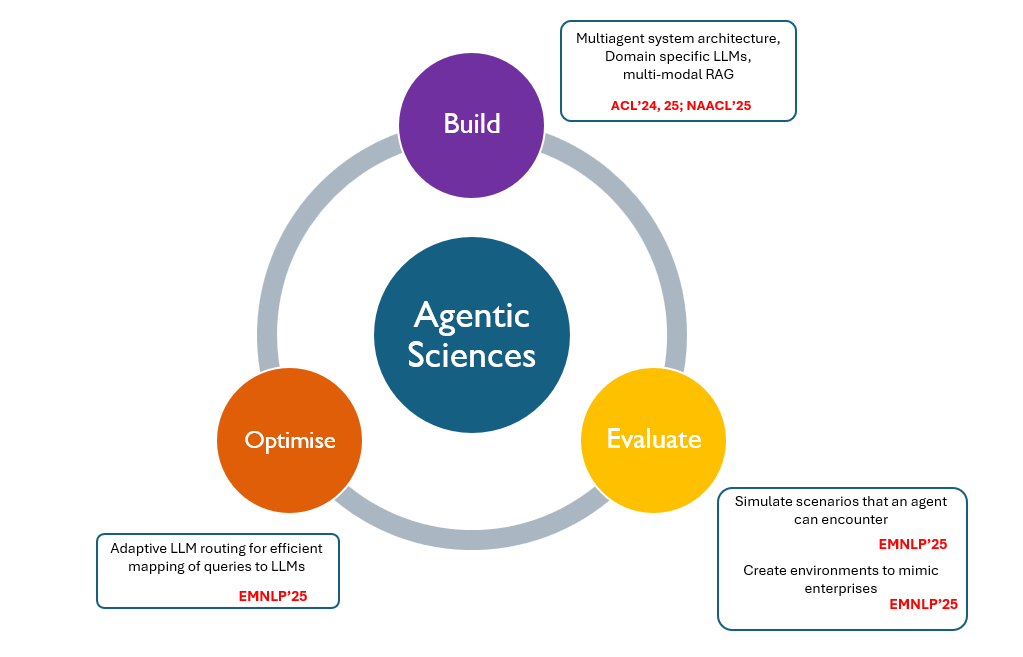

Our team focuses on developing next-generation agentic AI systems that can reason, plan, and execute complex tasks autonomously. We work at the intersection of large language models, multi-agent systems, and behavioral evaluation frameworks.

Our research spans novel evaluation methodologies for compound AI systems, contextual routing mechanisms for optimized model selection, and frameworks that assess how AI systems behave rather than how they score on static benchmarks.